Posts posted by juniez

-

Abrash at Valve has some special VR stuff of his own that's even better than the rift.

I'm optimistic about VR. We'll leave it at that for now.

valve's giving away their VR technology to oculus tho

http://www.rockpapershotgun.com/2014/01/17/valve-not-releasing-vr-hardware-giving-tech-to-oculus/

-

MSAA can miss fine detail like power lines as well (and it does nothing for alpha-tested textures), especially when you have low sample counts like 2x. in this respect postprocess AA can be more reliable, as some implementations attempt to reconstruct that missing data
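to make the "thin detail falls between samples" point concrete, here's a minimal 1D sketch. it assumes evenly spaced sample positions inside a single pixel (real hardware uses rotated / jittered patterns) and the function name `coverage` is made up for illustration:

```python
def coverage(line_start, line_end, sample_count):
    """Estimate how much of one pixel [0, 1) a thin line covers.

    Assumption: samples sit at evenly spaced positions (i + 0.5) / n.
    Real MSAA patterns differ, but the failure mode is the same.
    """
    hits = 0
    for i in range(sample_count):
        position = (i + 0.5) / sample_count
        # A sample only "sees" the line if it lands inside it.
        if line_start <= position < line_end:
            hits += 1
    return hits / sample_count


if __name__ == "__main__":
    # A line covering 20% of the pixel, sitting between the two 2x samples.
    for n in (2, 4, 8):
        print(n, coverage(0.4, 0.6, n))
```

with 2 samples (at 0.25 and 0.75) the line is missed entirely, so the pixel gets no contribution from it at all; with 8 samples two of them land on it.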

the fact that MSAA is faster than SSAA doesn't mean very much when both are incredibly expensive and both get destroyed on performance by postprocess AA

so yes, it is technically a hack and sacrifices (minimal) accuracy for great speeds - but then again, so is pretty much everything else in realtime rendering. If it weren't so, we'd all be using unbiased renderers with full radiosity calculations

... and that would be silly

edit:

not that i'm ripping on MSAA, it's great if you can afford it... it's just that postprocess AA can do nearly as well at a fraction of the cost. MSAA is certainly not the best in speed

-


yes, because essentially what SSAA does is take multiple rendering samples per pixel. for example with 2x SSAA (counting the factor per axis), you'd take 2^2 samples for every pixel (representing 2 samples along each axis), so every displayed pixel is an average of 4 sampled subpixels

it also means that in its most basic form it'll actually be rendering 2^2 times the number of pixels, which is obviously very expensive (MSAA is an optimized variant of this idea: it takes multiple coverage and depth samples per pixel but typically shades each pixel only once). then you get to 4x, which is 4^2 = 16 samples, and 8x is 8^2 = 64 and whoa!!! suddenly you're rendering your depth and stencil buffers at 15360x8640 instead of 1920x1080
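the arithmetic above can be sketched in a few lines. this is only an illustration of the per-axis counting used in this post, with a plain box-filter downsample; `ssaa_cost` and `downsample` are hypothetical helper names, not any engine's API:

```python
def ssaa_cost(width, height, factor):
    """Return (render_width, render_height, samples_per_pixel).

    `factor` is the per-axis supersampling factor, as counted in the post.
    """
    return width * factor, height * factor, factor * factor


def downsample(image, factor):
    """Average each factor x factor block of an oversized image.

    `image` is a list of rows of numbers (one grayscale value per subpixel).
    """
    out_height = len(image) // factor
    out_width = len(image[0]) // factor
    result = []
    for y in range(out_height):
        row = []
        for x in range(out_width):
            total = 0.0
            for dy in range(factor):
                for dx in range(factor):
                    total += image[y * factor + dy][x * factor + dx]
            # Each displayed pixel is the mean of its subpixels.
            row.append(total / (factor * factor))
        result.append(row)
    return result


if __name__ == "__main__":
    for factor in (2, 4, 8):
        print(factor, ssaa_cost(1920, 1080, factor))
```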

on the other hand, postprocess AA like FXAA / SMAA isn't really AA in the sampling sense, it's a bunch of clever assumptions used to smooth / blur out detected edges after the initial rendering process (hence the term post-process). It's very fast because it's a rather efficient calculation done after rendering, so at most the number of processed pixels is equal to your native resolution (so for a rendering resolution of 1920x1080, it'll always be 1920x1080; it's actually impossible to change this because... you've rendered your scene already). The only bad part is that it's never going to be true AA, it only seeks to imitate its end result... It gets very close, though (especially given its great performance gains!).

FXAA and SMAA are two postprocess AA techniques, MSAA is, well.... MSAA.
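a toy version of the "detect edges, then blur them" idea might look like the sketch below. to be clear, this is NOT FXAA or SMAA (both are far smarter about edge direction and shape); it's just a contrast threshold plus a neighbourhood average, and `postprocess_aa` and its `threshold` parameter are made up for illustration:

```python
def postprocess_aa(image, threshold=0.25):
    """Blur pixels that sit on a high-contrast edge of a grayscale image.

    `image` is a list of rows of values in [0, 1]. The output has exactly
    the same dimensions as the input: the pass only ever touches pixels
    that have already been rendered.
    """
    height, width = len(image), len(image[0])
    result = [row[:] for row in image]
    for y in range(height):
        for x in range(width):
            # Gather the pixel and its 4 neighbours, clamping at borders.
            values = (
                image[y][x],
                image[max(y - 1, 0)][x],
                image[min(y + 1, height - 1)][x],
                image[y][max(x - 1, 0)],
                image[y][min(x + 1, width - 1)],
            )
            # Only blend where local contrast suggests an edge.
            if max(values) - min(values) > threshold:
                result[y][x] = sum(values) / len(values)
    return result


if __name__ == "__main__":
    hard_edge = [[0.0, 0.0, 1.0, 1.0] for _ in range(3)]
    for row in postprocess_aa(hard_edge):
        print(row)
```

flat regions pass through untouched, and only the two columns on either side of the hard edge get softened.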

-


FXAA and other postprocess antialiasing techniques have their uses. the quality decrease (especially with more recent techniques like SMAA) isn't a lot and they're pretty much free compared to the ridiculously expensive MSAA

postprocess AA certainly isn't outdated by any means... considering MSAA is older by far and relies on a fancier means of bruteforcing samples

-

marmoset toolbag 2 is PBR though, lol

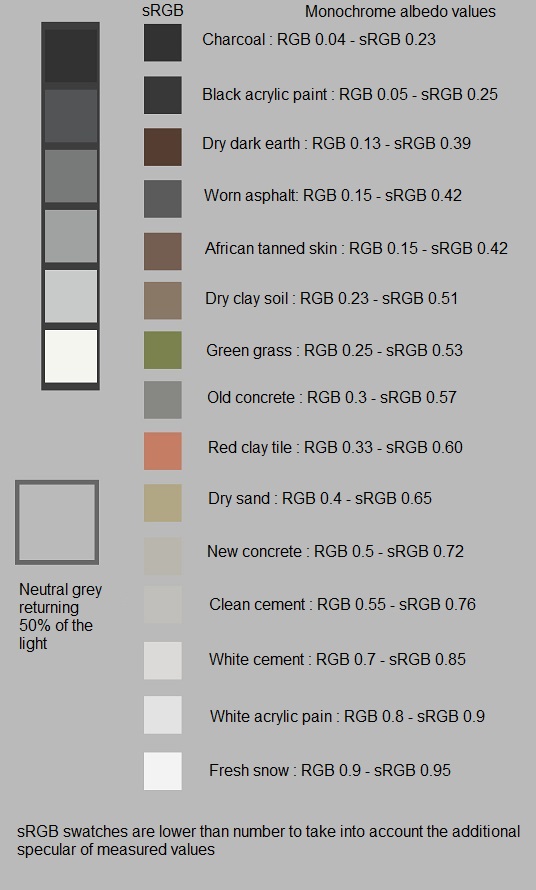

by real values I mean:

using these values in the appropriate albedo / metalness maps, you'll get a readable material under every environment and lighting condition (something that can't actually be achieved in the traditional lighting model because it doesn't follow the law of energy conservation)

and 20 steps.... isn't that much. most of the complication (it's not even that complicated) in your slides comes from their material layering. other than that it's not at all different from the traditional workflow (highpoly -> lowpoly -> bake -> bake editing if needed -> material definition -> wear)
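here's a very rough sketch of what "doesn't follow energy conservation" means in practice. it ignores angle dependence (fresnel) entirely, and both function names and the single-number reflectances are simplifying assumptions for illustration only:

```python
def traditional_reflectance(diffuse, specular):
    """Ad-hoc model: diffuse and specular are authored independently,
    so nothing stops their sum from exceeding the incoming light (1.0)."""
    return diffuse + specular


def conserving_reflectance(albedo, specular):
    """Simplified energy-conserving model: whatever is reflected
    specularly is no longer available for diffuse reflection."""
    return specular + (1.0 - specular) * albedo


if __name__ == "__main__":
    # Bright diffuse plus strong specular, as an artist might guess them.
    print(traditional_reflectance(0.8, 0.6))  # reflects more than arrives
    print(conserving_reflectance(0.8, 0.6))   # stays at or below 1.0
```

for inputs in the 0 to 1 range the second function can never return more than 1, which is why the same values stay readable no matter how bright the lighting gets.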

-


It's actually very very easy to switch your workflow and doesn't take any extra effort:

albedo -> diffuse WITHOUT BAKED AO / CAVITY, taking into account real-life values

metallic -> specular taking into account real-life values

roughness -> specular power (also real life values)

cavity should be generated by your baking program

the only change workflow-wise is that you would use different values consistently for different materials
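the mapping above can be sketched as a tiny conversion. everything numeric here is an assumption for illustration: the 0.04 dielectric reflectance is a common convention in metalness workflows, and the roughness-to-power formula (alpha = roughness^2, power = 2 / alpha^2 - 2) is one widely used Blinn-Phong approximation, not something LE3 itself defines:

```python
def pbr_to_traditional(albedo, metalness, roughness, dielectric_f0=0.04):
    """Convert metalness-workflow inputs to diffuse / specular / power.

    `albedo` is an (r, g, b) tuple in [0, 1]; `metalness` and `roughness`
    are scalars in [0, 1]. Hypothetical helper, not an engine API.
    """
    # Metals have no diffuse response; dielectrics keep their albedo.
    diffuse = tuple(c * (1.0 - metalness) for c in albedo)
    # Dielectrics get a small grey specular; metals tint it with albedo.
    specular = tuple(
        dielectric_f0 * (1.0 - metalness) + c * metalness for c in albedo
    )
    # Clamp roughness so a perfectly smooth surface doesn't divide by zero.
    alpha = max(roughness, 1e-3) ** 2
    specular_power = 2.0 / (alpha * alpha) - 2.0
    return diffuse, specular, specular_power


if __name__ == "__main__":
    gold_like = (1.0, 0.77, 0.34)    # illustrative values only
    plastic_like = (0.8, 0.1, 0.1)   # illustrative values only
    print(pbr_to_traditional(gold_like, 1.0, 0.3))
    print(pbr_to_traditional(plastic_like, 0.0, 0.5))
```

the point is just that the three PBR inputs line up with maps you already author, only with consistent values per material.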

+ It's impossible to just plug in a truly physically-based renderer within LE3's shader system at the moment - it requires a different lighting model

-

I'm not convinced, it's not noticeable when you play a fast FPS game, for example.

Specular maps with good variation already do a great job, and such a system will demand even more from the GPU, I think.

If I could only make a super game with great levels and great characters using specular/normal maps, I would be happy.

Why should I ask for the latest engine like UDK or the latest GPU tech if I can't even manage to create great characters and levels with standard shaders?

But if LE3 proposes such stuff ... indeed i will be interested.

PBR will save you time playing guesswork for the right diffuse / specular values for materials

and layered materials will save you time authoring assets (once you have a decent library of premade materials) AND ON top of that you'll get pretty much infinite texture density and physically accurate area lighting (assuming it's correctly implemented)

it's good stuff..!! and with OpenGL 4.0 it's not like you're targeting lower-end hardware anyway sooooo

-


yesssssss

-

http://blog.selfshadow.com/publications/s2013-shading-course/karis/s2013_pbs_epic_slides.pdf

it's the future. get on board y'all

-


Lightmaps back, Global illumination, Image based shaders?

in Suggestion Box

Posted

a real situation is bound to have shadowmapped lights, and shadowmap performance usually scales with geometry complexity