GUI Resolution Independence 2

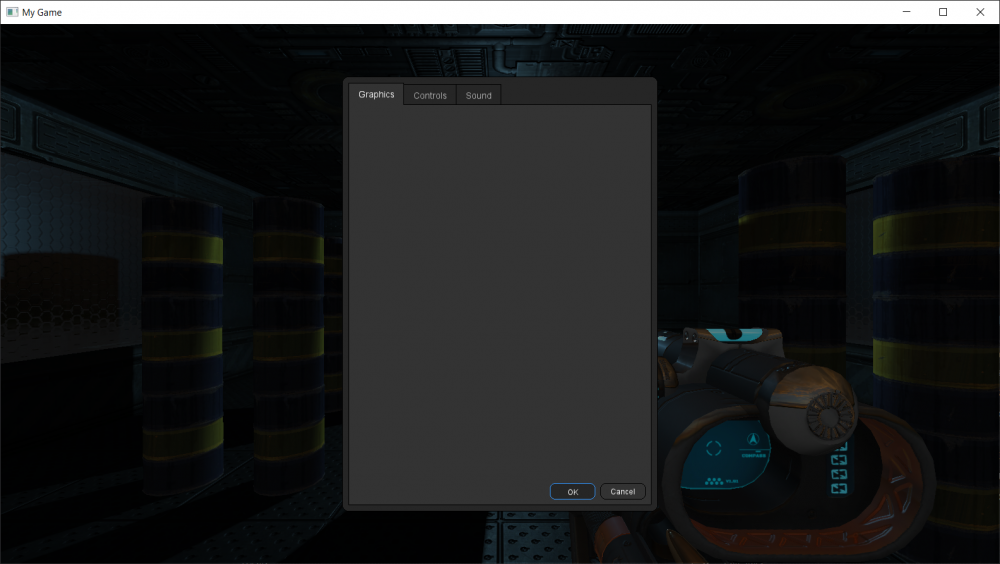

Here are some screenshots showing more complex interface items scaled at different resolutions. First, here is the interface at 100% scaling:

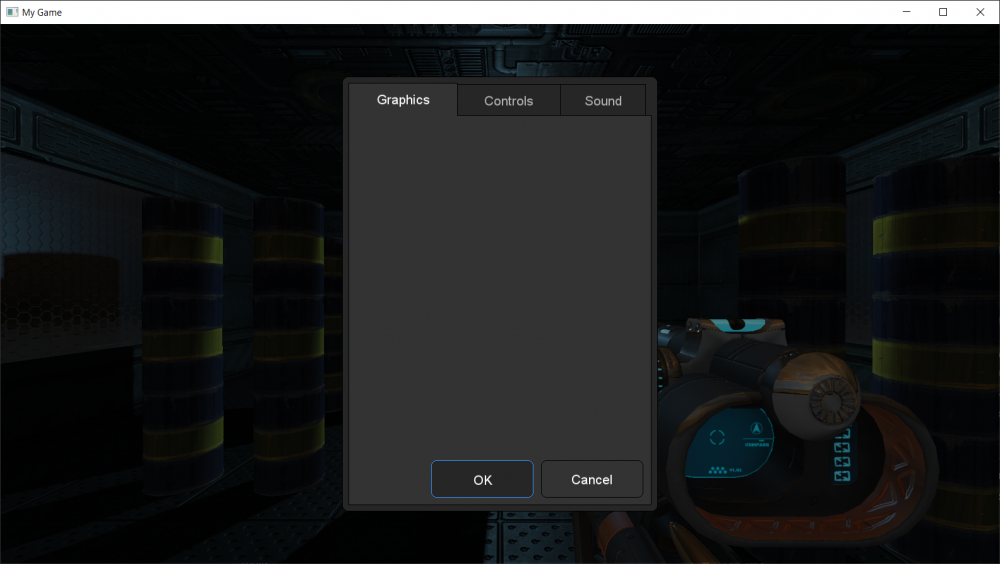

And here is the same interface at the same screen resolution, with the DPI scaling turned up to 150%:

The code to control this is sort of complex, and I don't care. GUI resolution independence is a complicated thing, so the goal should be to create a system that does what it is supposed to do reliably, not to make complicated things simpler at the expense of functionality.

function widget:Draw(x,y,width,height) local scale = self.gui:GetScale() self.primitives[1].size = iVec2(self.size.x, self.size.y - self.tabsize.y * scale) self.primitives[2].size = iVec2(self.size.x, self.size.y - self.tabsize.y * scale) --Tabs local n local tabpos = 0 for n = 1, #self.items do local tw = self:TabWidth(n) * scale if n * 3 > #self.primitives - 2 then self:AddRect(iVec2(tabpos,0), iVec2(tw, self.tabsize.y * scale), self.bordercolor, false, self.itemcornerradius * scale) self:AddRect(iVec2(tabpos+1,1), iVec2(tw, self.tabsize.y * scale) - iVec2(2 * scale,-1 * scale), self.backgroundcolor, false, self.itemcornerradius * scale) self:AddTextRect(self.items[n].text, iVec2(tabpos,0), iVec2(tw, self.tabsize.y*scale), self.textcolor, TEXT_CENTER + TEXT_MIDDLE) end if self:SelectedItem() == n then self.primitives[2 + (n - 1) * 3 + 1].position = iVec2(tabpos, 0) self.primitives[2 + (n - 1) * 3 + 1].size = iVec2(tw, self.tabsize.y * scale) + iVec2(0,2) self.primitives[2 + (n - 1) * 3 + 2].position = iVec2(tabpos + 1, 1) self.primitives[2 + (n - 1) * 3 + 2].color = self.selectedtabcolor self.primitives[2 + (n - 1) * 3 + 2].size = iVec2(tw, self.tabsize.y * scale) - iVec2(2,-1) self.primitives[2 + (n - 1) * 3 + 3].color = self.hoveredtextcolor self.primitives[2 + (n - 1) * 3 + 1].position = iVec2(tabpos,0) self.primitives[2 + (n - 1) * 3 + 2].position = iVec2(tabpos + 1, 1) self.primitives[2 + (n - 1) * 3 + 3].position = iVec2(tabpos,0) else self.primitives[2 + (n - 1) * 3 + 1].size = iVec2(tw, self.tabsize.y * scale) self.primitives[2 + (n - 1) * 3 + 2].color = self.tabcolor self.primitives[2 + (n - 1) * 3 + 2].size = iVec2(tw, self.tabsize.y * scale) - iVec2(2,2) if n == self.hovereditem then self.primitives[2 + (n - 1) * 3 + 3].color = self.hoveredtextcolor else self.primitives[2 + (n - 1) * 3 + 3].color = self.textcolor end self.primitives[2 + (n - 1) * 3 + 1].position = iVec2(tabpos,2) self.primitives[2 + (n - 1) * 3 + 2].position = iVec2(tabpos + 1, 3) self.primitives[2 + (n - 1) * 3 + 3].position = iVec2(tabpos,2) end self.primitives[2 + (n - 1) * 3 + 3].text = self.items[n].text tabpos = tabpos + tw - 2 end end

-

1

1

SCP

SCP

4 Comments

Recommended Comments